I am developing a pelisalacarta channel for this site, filmsubito.tv, because it has a lot of varied content.

Here's a list of issues I need help with:

1) Separating the two home-page sections, "FILM STREAMING - NOVITÀ" and "GLI ULTIMI AGGIUNTI", because the pattern (patron) I wrote extracts both of them together.

It also takes a long time to load the movies, but if I use

```python
patron = '</span>.*?<a href="(.*?)".*?><img src="(.*?)" width="145"></span><span class="vertical-align"></span></span></a>.*?<p style="font-size:14px;font-weight:bold">(.*?)</p>.*?<p style="font-size:12px;line-height:15px">(.*?)</p>'
```

2) I can't figure out the paginador (pager), because the page has two of them with the same structure.

3) Extracting the videos from the film pages (at least I don't think any new servers need to be added).

4) Extracting the TV shows and paginating them properly.

5) Paginating the genres.
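For items 1 and 2, one approach that may help is to cut the home page into one slice per section before running any pattern, so that each section keeps its own pager and the two identical pagers no longer collide. This is a minimal sketch, assuming both headings appear verbatim in the HTML in that order (the site might instead escape À as `&Agrave;`) and that each section's "»" link sits between its own heading and the next one. `SECTION_HEADINGS`, `split_sections` and `next_page_url` are hypothetical names, not part of pelisalacarta:

```python
# -*- coding: utf-8 -*-
import re

# Assumption: both headings appear literally in the home-page HTML, in this order.
SECTION_HEADINGS = ["FILM STREAMING - NOVITÀ", "GLI ULTIMI AGGIUNTI"]

# Same "next page" link shape as patronvideos in peliculas(), but with a
# negated character class, so the capture cannot run past the closing quote.
NEXT_PAGE_RE = re.compile(r'<a href="([^"]+)">»</a>')


def split_sections(html, headings):
    """Return {heading: html_slice}; each slice runs from its heading to the
    start of the next heading found, or to the end of the page."""
    positions = []
    for heading in headings:
        pos = html.find(heading)
        if pos != -1:
            positions.append((pos, heading))
    positions.sort()  # order by position in the page, not by list order

    sections = {}
    for i, (start, heading) in enumerate(positions):
        end = positions[i + 1][0] if i + 1 < len(positions) else len(html)
        sections[heading] = html[start:end]
    return sections


def next_page_url(section_html):
    """Return the first "»" link inside one section slice, or None."""
    match = NEXT_PAGE_RE.search(section_html)
    return match.group(1) if match else None
```

In `peliculas()` you would then run `patron` on one slice instead of the whole `data`, and build the "Successivo>>" item from `urlparse.urljoin(item.url, next_page_url(slice))`. Each section could get its own entry in `mainlist()`, with the heading passed in `extra`. Searching a smaller slice with tighter patterns may also reduce the loading time, since many `.*?` groups under `re.DOTALL` can backtrack a lot on a long page.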

Mostly, I lack time: I started a new job, so I have to be on site earlier and leave later.

Any help will be appreciated. Here's what I have done so far:

Código: Seleccionar todo

# -*- coding: utf-8 -*-

#------------------------------------------------------------

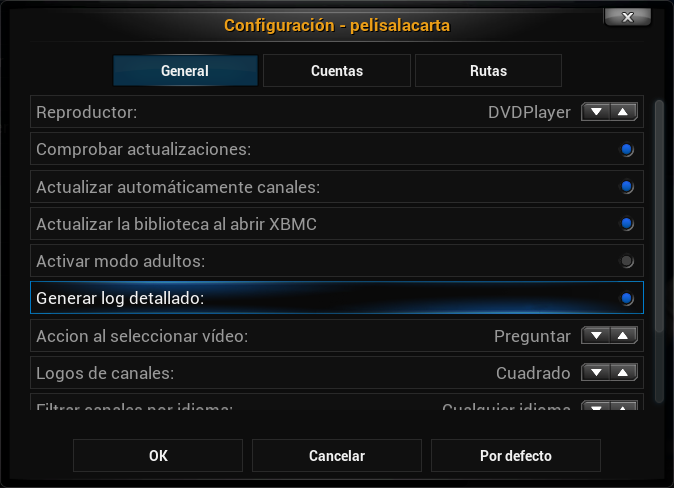

# pelisalacarta - XBMC Plugin

# Canal para filmsubito.tv

# http://blog.tvalacarta.info/plugin-xbmc/pelisalacarta/

#------------------------------------------------------------

import urlparse

import re

import sys

from core import logger

from core import config

from core import scrapertools

from core.item import Item

from servers import servertools

__channel__ = "filmsubitotv"

__category__ = "F,A,S,D"

__type__ = "generic"

__title__ = "FilmSubito.tv"

__language__ = "IT"

sito="http://www.filmsubito.tv/"

DEBUG = config.get_setting("debug")

def isGeneric():

return True

def mainlist(item):

logger.info("pelisalacarta.filmsubitotv mainlist")

itemlist = []

itemlist.append( Item(channel=__channel__, title="[COLOR azure]Home[/COLOR]", action="peliculas", url=sito, thumbnail="http://dc584.4shared.com/img/XImgcB94/s7/13feaf0b538/saquinho_de_pipoca_01"))

itemlist.append( Item(channel=__channel__, title="[COLOR azure]Serie Anni 80[/COLOR]", action="serie80", url=sito ))

itemlist.append( Item(channel=__channel__, title="[COLOR yellow]Cerca...[/COLOR]", action="search", thumbnail="http://dc467.4shared.com/img/fEbJqOum/s7/13feaf0c8c0/Search"))

return itemlist

def serie80(item):

logger.info("pelisalacarta.filmsubitotv categorias")

itemlist = []

data = scrapertools.cache_page(item.url)

logger.info(data)

# The categories are the options for the combo

patron = '<a href="#" class="dropdown-toggle wide-nav-link" data-toggle="dropdown">Serie anni 80<b class="caret"></b></a>.*?<li class.*? ><a title="(.*?)".*?href="(.*?)">.*?</a></li>'

matches = re.compile(patron,re.DOTALL).findall(data)

scrapertools.printMatches(matches)

for scrapedtitle,scrapedurl in matches:

#scrapedurl = urlparse.urljoin(item.url,url)

if (DEBUG): logger.info("title=["+scrapedtitle+"], url=["+scrapedurl+"], thumbnail=["+scrapedthumbnail+"]")

itemlist.append( Item(channel=__channel__, action="peliculas" ,title=scrapedtitle, url=scrapedurl))

return itemlist

def peliculas(item):

logger.info("pelisalacarta.filmsubitotv peliculas")

itemlist = []

# Descarga la pagina

data = scrapertools.cache_page(item.url)

# Extrae las entradas (carpetas)

patron = '</span>.*?<a href="(.*?)".*?><img src="(.*?)".*?width="145"></span><span class="vertical-align"></span></span></a>.*?<p style="font-size:14px;font-weight:bold">(.*?)</p>.*?<p style="font-size:12px;line-height:15px">(.*?)</p>'

matches = re.compile(patron,re.DOTALL).findall(data)

scrapertools.printMatches(matches)

for scrapedurl,scrapedthumbnail,scrapedtitle,scrapedplot in matches:

if (DEBUG): logger.info("title=["+scrapedtitle+"], url=["+scrapedurl+"], thumbnail=["+scrapedthumbnail+"]")

itemlist.append( Item(channel=__channel__, action="findvideos", title=scrapedtitle, url=scrapedurl , thumbnail=scrapedthumbnail , plot=scrapedplot, folder=True, fanart=scrapedthumbnail) )

# Extrae el paginador

patronvideos = '<li class="">.*?<a href="(.*?)">»</a>.*?</li>.*?</ul>'

matches = re.compile(patronvideos,re.DOTALL).findall(data)

scrapertools.printMatches(matches)

if len(matches)>0:

scrapedurl = urlparse.urljoin(item.url,matches[0])

itemlist.append( Item(channel=__channel__, extra=item.extra, action="peliculas", title="[COLOR orange]Successivo>>[/COLOR]" , url=scrapedurl , thumbnail="http://2.bp.blogspot.com/-fE9tzwmjaeQ/UcM2apxDtjI/AAAAAAAAeeg/WKSGM2TADLM/s1600/pager+old.png", folder=True) )

return itemlist

def search(item,texto):

logger.info("[filmsubitotv.py] "+item.url+" search "+texto)

item.url = "http://www.filmsubito.tv/search.php?keywords="+texto

try:

return peliculas(item)

# Se captura la excepción, para no interrumpir al buscador global si un canal falla

except:

import sys

for line in sys.exc_info():

logger.error( "%s" % line )

return []
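For item 3: `peliculas()` sends every film to a `findvideos` action, but the code above does not define one. Other pelisalacarta channels commonly pass the page data to a `find_video_items` helper in `servertools`; check whether your version has it before writing anything by hand. If the player links do have to be pulled out manually, here is a minimal sketch, assuming the film page embeds each player in an `<iframe src="...">` (not verified against filmsubito.tv). `find_embed_urls` and `IFRAME_RE` are hypothetical names:

```python
import re

try:  # Python 3
    from urllib.parse import urljoin
except ImportError:  # Python 2, as used by the channel above
    from urlparse import urljoin

# Assumption: each video player is embedded as <iframe ... src="...">.
IFRAME_RE = re.compile(r'<iframe[^>]+src="([^"]+)"', re.IGNORECASE)


def find_embed_urls(page_url, html):
    """Return the iframe URLs of a page, made absolute and de-duplicated
    in the order they first appear."""
    seen = set()
    urls = []
    for src in IFRAME_RE.findall(html):
        # urljoin also turns protocol-relative "//host/..." links into full URLs
        absolute = urljoin(page_url, src)
        if absolute not in seen:
            seen.add(absolute)
            urls.append(absolute)
    return urls
```

The returned URLs would still need to be matched to the existing server connectors, which is where the current `servers` modules would come in.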